Augmentative and Alternative Communication AAC Devices for Complex Communication Needs Empowering Individuals with Severe Speech Impairments

Augmentative and Alternative Communication (AAC) Advances: A Review of Configurations for Individuals with a Speech Disability

The integration of smart technologies into daily life has widened the scope of dedicated and non-dedicated AAC applications [7,19]. This section surveys high-tech AAC devices with regard to signal acquisition, machine learning (ML), and output generation.

3.1. AAC Signal Sources and Associated Processing

AAC interfaces are activated through an array of methods for the detection of human signals generated via body movements, respiration, phonation, or brain activity [4]. The acquisition of AAC signals is accomplished through several modalities. Table 1 outlines the AAC signal sensing categories discussed in this review together with their relevant activation methods. The listed AAC access methods can be used stand-alone or in combination with one another. For example, imaging methods may be combined with touch-activated methods or mechanical switches to give users multi-modal access through the same device. A commercial example is Tobii Dynavox PCEye Plus, which combines several functionalities, including eye tracking and switch access, to operate a computer screen [20].

Table 1. Sensing modalities of AAC signals.

| Signal Sensing Category | Activation Method |
|---|---|
| Imaging methods | Eye gaze systems, head-pointing devices |
| Mechanical and electro-mechanical methods | Mechanical keyboards, switch access |
| Touch-activated methods | Touchscreens, touch membrane keyboards |
| Breath-activated methods | Microphones, low-pressure sensors |
| Brain-Computer Interface methods | Invasive and non-invasive |

3.1.1. Imaging Methods

Imaging methods, such as eye gazing, eye tracking and head-pointing devices, have been widely reported in the literature [21,22,23,24,25,26,27,28,29,30,31]. Eye gaze technologies track the eye movements of a user to determine the eye gaze direction [24,27]. Several eye tracking methods are commonly used, including video-oculography [32], electro-oculography [33], contact lenses [34], and electromagnetic scleral coils [21,25,30,35,36]. Oculography is concerned with the measurement and recording of a user's eye movements [35]. Video-oculography and electro-oculography use video-based tracking systems and skin surface electrodes, respectively, to track the movements of the eye [25]. In the context of AAC, non-invasive eye tracking methods are better suited to address the daily needs of users who lack motor abilities [27,29]. Practical methods combine non-invasive cameras, an illumination source, image processing algorithms, and speech synthesizers to communicate a user's message [25,27]. Image data are obtained in video-oculography-operated systems using one or more cameras [23,27]. Typical video-oculography systems use glints produced on the surface of the eye by an illumination source, such as near-infrared (NIR) LEDs, and gaze locations are in turn estimated from the movement of the pupil in relation to the illuminated glint positions [34].

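The components of a typical video-based tracking system are shown in the accompanying figure. Different approaches are presented in the literature for calculating the accuracy of an eye tracking system, including the distance accuracy (in cm or in pixels) and the angular accuracy (in degrees) [22]. The pixel accuracy $P_{acc}$ can be given by

$$P_{acc} = \sqrt{\left(x_{target} - gaze_x\right)^2 + \left(y_{target} - gaze_y\right)^2} \tag{1}$$

where $x_{target}$ and $y_{target}$ are the coordinates of the target points, and $gaze_x$ and $gaze_y$ are the gaze point coordinates given by

$$gaze_x = \operatorname{mean}\left(\frac{x_{left} + x_{right}}{2}\right) \tag{2}$$

and

$$gaze_y = \operatorname{mean}\left(\frac{y_{left} + y_{right}}{2}\right) \tag{3}$$

respectively, with the subscripts $left$ and $right$ referring to the coordinates of the gaze points of the left and right eyes. The on-screen distance accuracy ($DA$) is similarly given by

$$DA = P_{size}\sqrt{\left(gaze_x - \frac{x_{pixels}}{2}\right)^2 + \left(y_{pixels} - gaze_y + \frac{offset}{P_{size}}\right)^2} \tag{4}$$

where $P_{size}$ is calculated based on the resolution, height, and width of the screen, $x_{pixels}$ and $y_{pixels}$ are the pixel shifts in the directions of x and y, respectively, and $offset$ is the distance between the eye tracking unit and the lower edge of the screen [22,37]. The angular accuracy ($AA$) can also be computed via

$$AA = \frac{P_{size}\, P_{acc}\, \cos^2\left(\operatorname{mean}(\theta)\right)}{\text{meandist}} \tag{5}$$

where the gaze angle $\theta$ is given by

$$\theta = \tan^{-1}\left(\frac{DA}{\text{dist}}\right) \tag{6}$$

and dist and meandist are the distances from the eye to the screen and from the eye to the tracker, respectively [22,37].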
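As an illustration of how such accuracy measures can be evaluated in practice, the following is a minimal Python sketch. It assumes that the pixel accuracy is the Euclidean distance between a target and the mean binocular gaze point, and it uses a simplified angular measure (the visual angle subtended by the on-screen error at the eye). The function names, the sample coordinates, the pixel size, and the viewing distance are hypothetical and are not taken from [22,37].

```python
import math

def mean_gaze_point(left_eye, right_eye):
    """Average left- and right-eye gaze samples into one (x, y) gaze point.

    left_eye, right_eye: lists of (x, y) pixel coordinates, one pair per sample.
    """
    n = len(left_eye)
    gaze_x = sum((l[0] + r[0]) / 2 for l, r in zip(left_eye, right_eye)) / n
    gaze_y = sum((l[1] + r[1]) / 2 for l, r in zip(left_eye, right_eye)) / n
    return gaze_x, gaze_y

def pixel_accuracy(target, gaze):
    """Euclidean distance in pixels between the target and the gaze point."""
    return math.hypot(target[0] - gaze[0], target[1] - gaze[1])

def angular_error_deg(pixel_error, pixel_size_mm, eye_to_screen_mm):
    """Visual angle in degrees subtended by the on-screen error (assumed geometry)."""
    return math.degrees(math.atan2(pixel_error * pixel_size_mm, eye_to_screen_mm))

# Hypothetical samples recorded while the user looks at the target (500, 300).
left = [(498.0, 302.0), (502.0, 298.0)]
right = [(506.0, 306.0), (510.0, 302.0)]

gaze = mean_gaze_point(left, right)
error_px = pixel_accuracy((500.0, 300.0), gaze)
error_deg = angular_error_deg(error_px, pixel_size_mm=0.25, eye_to_screen_mm=600.0)
print(gaze, round(error_px, 3), round(error_deg, 3))
```

Averaging both eyes before computing the error mirrors the binocular gaze point described in the text; a monocular tracker would simply pass the same samples for both arguments.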

Components of a typical eye gaze system, adapted from [22,38]. The optical and the visual axes are used for the calibration process commonly required to set up the eye gaze system [22,39].

Fixations and saccades are commonly used to analyze eye movements [40]. Fixations are the pauses during which a user intentionally holds their gaze on the target gaze point, whereas saccades are the rapid eye movements occurring between fixations. Metrics of eye gaze estimation include fixation durations, fixation rates, fixation sequences, and saccadic amplitudes and velocities [22,40]. Although electro-oculography is a cost-effective eye tracking method, infrared pupil-corneal reflection (IR-PCR) video-based systems are most commonly used by speech and language practitioners due to their non-invasive nature [25,27]. A calibration operation is essential in video-based trackers to fine-tune the system to a user's eye movements [41]. As illustrated in the figure of the eye gaze system components, a user's visual axis deviates from the optical axis when a gaze system is used. Calibration is the process of finding the visual axis pertinent to each user by calculating the angle between the optical axis and the line joining the fovea (the point of highest sensitivity in the retina) with the center of corneal curvature [22].

Direct estimation of the visual axis is usually not feasible; the calibration process therefore enables the tracker to capture and learn the difference between the user's eye positions when gazing at a specific target and the actual coordinates of that target. The user's head orientation should also be considered in IR-PCR systems, as head movements can adversely affect the calculation of the glint vectors [22]. Ongoing studies, however, are advancing eye tracking methods to overcome these constraints, opening the possibility of IR-free eye tracking and robust algorithms for head movement compensation [42].
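The idea of learning the difference between measured gaze positions and known target coordinates can be sketched in a few lines of Python. This is a deliberately simplified illustration that assumes a constant offset per user; commercial trackers typically fit richer mappings, and the function names and sample points below are hypothetical.

```python
def fit_offset(measured, targets):
    """Learn the mean (dx, dy) difference between targets and measured gaze points."""
    n = len(measured)
    dx = sum(t[0] - m[0] for m, t in zip(measured, targets)) / n
    dy = sum(t[1] - m[1] for m, t in zip(measured, targets)) / n
    return dx, dy

def apply_offset(point, offset):
    """Correct a raw gaze point with the learned calibration offset."""
    return point[0] + offset[0], point[1] + offset[1]

# Hypothetical calibration run: the user fixates three known on-screen targets.
targets = [(100.0, 100.0), (900.0, 100.0), (500.0, 500.0)]
measured = [(112.0, 95.0), (910.0, 93.0), (514.0, 494.0)]

offset = fit_offset(measured, targets)
corrected = apply_offset((300.0, 200.0), offset)
print(offset, corrected)
```

A constant offset cannot compensate for head movement or for errors that vary across the screen, which is one reason the head orientation constraint discussed above matters.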

A large number of eye tracking and eye gaze AAC applications are commercially available. Several of them, such as Tobii Dynavox PCEye Plus [20] and Eyespeak [43], can be accessed in a multimodal form, which enables users to combine other input methods, such as switch access, head tracking, or touchscreens, with the tracking software to suit their individual needs. IntelliGaze (version 5) with integrated communication & environment control [44] is another eye tracking AAC tool, which allows messages to be sent and received for improved communication. Most of the listed solutions include extensive vocabulary sets, word prediction, and advanced environmental controls for enhanced user support. Other eye tracking systems, such as EagleEyes [45], allow the control of an on-screen cursor via electrodes placed on the user's head to aid the communication of users with profound disabilities [31].

3.1.2. Mechanical and Electro-Mechanical Methods

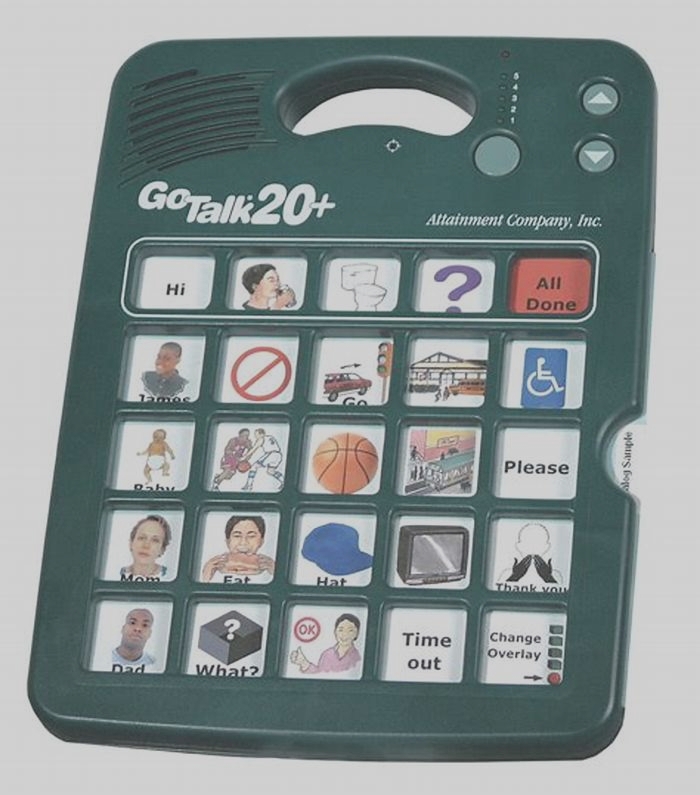

Mechanical and electro-mechanical AAC devices support both direct and indirect selection access methods. Direct selection offers users a set of choices and requires a voluntary selection of the intended message on the user's part. This usually involves the coordinated voluntary control of a body part, such as the hand or fingers, or of a pointing device, to select a message [19]. Mechanically activated direct-selection methods include mechanical keyboards, which use the physical depression of the pressed keys to register a user selection. Keyboard layouts may be reconfigured for individuals who find a standard keyboard difficult to use because of the coordination required between the two hands [4].

For individuals lacking voluntary control, communication via direct selection is often cumbersome; consequently, indirect selection methods are best suited to this group of users [19]. Indirect selection predominantly relies on scanning, in which options are presented systematically at timed intervals for the user to select from [19,46]. Mechanical scanning methods include single switches, arrays of switches, or other variations activated by the application of a force [4]. Switches are generally considered a form of low-tech AAC due to their minimal hardware requirements; however, switch applications have recently expanded to give users access to several high-tech AAC platforms, including computers, tablets, and smart devices, via scanning. Three scanning techniques are in use, each suited to users with specific motor abilities: automatic scanning presents items at adjustable time intervals, set according to the user's skills, until a selection is made; step scanning lets the user control the presentation of selections and, in turn, the rate of advancement; and inverse scanning involves holding down a control interface and releasing it upon the desired selection [4]. The accompanying figure shows a visual scanning interface together with typical activation switches.

(a) A sample visual scanning interface activated via switch scanning. The yellow box moves vertically across the lines until a selection is made, followed by a gliding green box moving horizontally across the highlighted line until a letter is also selected. In (b), two scanning button switches are displayed.
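The row-then-column scanning behaviour described above can be illustrated with a short Python sketch that estimates how long automatic scanning takes to spell a message. The grid layout, the function names, and the scan interval are hypothetical, and the model assumes that scanning restarts from the first row after every selection and that no selection errors occur.

```python
# Hypothetical 5x6 letter grid; real layouts are often ordered by letter frequency.
GRID = ["ABCDEF", "GHIJKL", "MNOPQR", "STUVWX", "YZ_.,?"]

def scan_steps(letter, grid=GRID):
    """Return (row_steps, column_steps) elapsed before `letter` is highlighted."""
    for row_index, row in enumerate(grid):
        col_index = row.find(letter)
        if col_index != -1:
            return row_index + 1, col_index + 1
    raise ValueError(f"{letter!r} is not on the scanning grid")

def selection_time(message, scan_interval_s=1.0, grid=GRID):
    """Estimate the seconds needed to spell `message` with automatic scanning."""
    total_steps = sum(sum(scan_steps(ch, grid)) for ch in message)
    return total_steps * scan_interval_s

steps_for_h = scan_steps("H")
time_for_hi = selection_time("HI", scan_interval_s=1.5)
print(steps_for_h, time_for_hi)
```

Under these assumptions, the time per letter grows with its row and column position, which is why the scan interval and the grid ordering both affect the communication rate.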

In addition to letters, scanning interfaces can include a variety of access options, such as icons, pre-stored messages, and auditory messages. Some operating systems also provide the option of device navigation via an external switch. The position and access method of a switch are user dependent. Switches can be placed close to the hands or the feet for ease of activation, and mechanical switches can also be mounted on wheelchairs to allow access using head movements. Different shapes and types of switches are available to suit the user's requirements. In general, mechanical switch scanning requires minimal motor movement; however, communication rates can be slowed by the delay required to make a selection. Nonetheless, based on the requirements of some AAC user groups, indirect access methods utilizing switch scanning may still aid in the communication of basic needs. As implied by the HAAT model, the user's requirements specify the objectives of using a communication aid. Therefore, the independent communication of these user groups could be among the primary targets of using an intervention.

3.1.3. Touch-Activated Systems

With the rapid development of touchscreens, touch-activated AAC applications are now commonly used for direct selection. Touchscreen technologies comprise various types, including resistive, capacitive, surface acoustic wave, and optical/infrared touchscreens [47]. Resistive and capacitive touchscreens are predominantly used in smart devices [48]. Resistive touchscreens depend on the force or pressure applied by the user's fingers, whereas capacitive touchscreens are activated by the electrical charge present on the user's finger [49]. Although resistive touchscreens are cost-efficient, capacitive touchscreens generally offer better visual clarity, an added benefit for AAC users with a degree of visual impairment. Touch membrane keyboards are also used by AAC users. They are built from conductive flat surfaces separated by non-conductive spacers, and holding down a key applies pressure that generates an input signal to the AAC device [19].

Several AAC touchscreen applications, such as Verbally [50], Proloquo2Go [51], and Predictable™ [10,52], are currently available for tablets and smart devices, offering rapid and portable access to an AAC solution. The tools operate using a variety of activation methods, primarily image-based selection and word spelling, with output synthesized via the device's built-in text-to-speech and speech generation capabilities, as illustrated in the accompanying figure. The application interfaces can usually be tailored, giving users the flexibility to set up the devices according to their needs. The costs of the solutions vary according to several factors, including the capabilities of the tool and the sophistication of the software. AAC users make selections on touchscreens and touch-activated systems by swiping and tapping; however, such actions can be restrictive for users who are physically impaired [4]. Nonetheless, accuracy can be improved using pointers, and the icons presented on a touchscreen often have the advantage of being cognitively easy to select and less demanding than operating a regular computer [4].

Examples of (a) a dedicated touch-based device and (b) a non-dedicated smart device running an AAC application (APP), usually with predictive language model and speech generation capabilities.

3.1.4. Breath-Activated Systems

The wide availability of sensing modalities expands the scope of AAC control interfaces to include the detection of respiratory signals in addition to regular voluntary body movements [4]. Voluntary body movements are commonly detected by integrating sensors with imaging and/or optical, mechanical, and electro-mechanical devices. Respiration signals are recorded via a wide range of modalities, including fibre optic sensors [53], pressure and thermal sensors [54], photoplethysmogram (PPG) measurements [55], electroencephalogram (EEG) signals [56], and the examination of airflow [56,57]. Both discrete and continuous breathing signals can be used to encode messages, as illustrated in the accompanying figures. Discrete breath encoding involves generating soft and heavy breathing blows that are encoded either as binary combinations of zeros and ones representing the user's intended messages, or as Morse code representing the letters of the International Morse Code. Continuous breath encoding, on the other hand, modulates the speed, amplitude, and phase of the breathing signal to create patterns representing the intended message. The accompanying figures also illustrate, for a mobile-based app, the training and retrieval modes in which continuous breathing patterns are linked to user-selected phrases.

Examples of (a) discrete breath encoding, where soft and heavy breathing blows are recorded to encode combinations of zeros and ones, or Morse codes, representing the intended messages, and (b) continuous breath encoding, where the speed, amplitude, and phase of breathing are modulated to create patterns representing the intended message.

Examples of (a) training mode, and (b) live mode of continuous breath encoding for the storage and the retrieval of breathing patterns linked to a user phrase using a mobile APP.
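Discrete breath encoding can be illustrated with a short Python sketch in which each blow is labelled soft or heavy by comparing its peak amplitude with a threshold, and the resulting bit string is looked up in a phrase table. The threshold value, the code length of four blows, the function names, and the phrases themselves are assumptions chosen for illustration only.

```python
# Hypothetical phrase table; a complete table would assign a phrase to every code.
PHRASES = {
    "0001": "I am thirsty",
    "0010": "I am hungry",
    "0011": "I need help",
}

def blows_to_bits(peak_amplitudes, threshold=0.5):
    """Label each blow '0' (soft) or '1' (heavy) by thresholding its peak amplitude."""
    return "".join("1" if peak >= threshold else "0" for peak in peak_amplitudes)

def decode_phrase(peak_amplitudes, threshold=0.5):
    """Translate a sequence of four blows into its predefined phrase."""
    bits = blows_to_bits(peak_amplitudes, threshold)
    if len(bits) != 4:
        raise ValueError("expected exactly four blows")
    return PHRASES.get(bits, "<unassigned code>")

# Normalized peak amplitudes of four consecutive blows (hypothetical values).
bits = blows_to_bits([0.2, 0.1, 0.9, 0.8])
phrase = decode_phrase([0.2, 0.1, 0.9, 0.8])
print(bits, phrase)
```

In a deployed system the threshold would likely need to be tuned per user, since the difference between a soft and a heavy blow varies with respiratory ability.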

An early respiration-activated AAC development, a breath-to-text application, was initiated at the Cavendish Laboratory at Cambridge University [58]. The study used fine breath tuning to drive Dasher in support of the communicative requirements of AAC users. Dasher is a text-entry system with a predictive language model, available on several operating systems, that uses one- and two-dimensional inputs from pointing devices to control an on-screen cursor. The fine breath tuning system encodes letters using Dasher's interface and a specially designed thoracic belt worn around the chest. Two inches of the belt are replaced by an elastic material, with a sensor measuring the changes in the user's waist circumference resulting from breathing variations. The study reports an expert-user conversational rate of 15 words per minute with this system. The use of sniffing signals for AAC was also established in [59]. A device was developed to measure human nasal pressure via a nasal cannula and a pressure transducer, and was tested with individuals in LIS and with quadriplegic users. To write text, the captured nasal pressure changes are converted into electrical signals and passed to a computer. The device offers two interfaces for the user's selection of letters: a letter-board interface and a cursor-based interface. The system aids users in LIS, with reported rates of three letters per minute.

Microphones can also be used in combination with an AAC interface. The loss of speech abilities associated with SLCN centres the use of microphones on two AAC areas: speech augmentation for individuals with a partial loss of speech [60], and breath encoding for individuals with a speech disability [57,61]. Speech augmentation applications, such as Voiceitt [62], are currently being researched to aid the communication of individuals with dysarthria or who use non-standard forms of speech. Voiceitt uses specialized software and the built-in capabilities of a portable device to understand dysarthric speech and allow real-time user communication. Breath encoding, on the other hand, is being researched to aid the communication of users lacking speech abilities. The encoding of distinct inhalation and exhalation signals is presented in [61] to produce synthesized machine spoken words (SMSW) from soft and heavy blows represented as four-bit combinations of zeros and ones. Classification is based on threshold values applied to the generated blows. A micro-controller unit and an MP3 voice module are attached to the microphone to execute the pattern classification and play back the SMSW. The 16 discrete combinations are linked to predefined phrases selected with the aid of medical practitioners. A device named TALK offers another solution, combining a micro-electro-mechanical-system (MEMS) microphone with two low-cost micro-controllers; it similarly uses distinct inhalation and exhalation signals to encode letters through the International Morse Code and produce SMSW [2]. A study also reports the use of analog breath encoding for AAC purposes through the recognition of continuous breathing modulations [57]. Analog encoding of the acquired breathing signals is reported to provide an increased bandwidth at low breathing frequencies, as it uses changes in the signal's amplitude, frequency, and phase to encode a user's intended meanings. Classification is based on the dynamic time warped distances between the tested breathing patterns. A systematic reliability of 89% is reported with increased familiarity with the system.
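Classification by dynamic time warped distance can be sketched as a nearest-template search, as in the minimal Python example below. This is a textbook dynamic time warping (DTW) formulation with an absolute-difference cost, not necessarily the exact variant used in [57]; the template patterns, phrase labels, and function names are hypothetical.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] holds the best alignment cost of a[:i] against b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

def classify(pattern, templates):
    """Return the phrase whose stored template is nearest to `pattern` under DTW."""
    return min(templates, key=lambda phrase: dtw_distance(pattern, templates[phrase]))

# Hypothetical breathing-amplitude templates stored during a training mode.
templates = {
    "yes": [0, 1, 0, 1, 0],
    "no": [0, 2, 2, 2, 0],
}

# The same "yes" pattern produced more slowly: DTW absorbs the time stretching.
stretched_yes = [0, 0, 1, 1, 0, 1, 0]
label = classify(stretched_yes, templates)
print(label, dtw_distance(stretched_yes, templates["yes"]))
```

The appeal of DTW in this setting is that a user rarely reproduces a breathing pattern at exactly the same speed, and the warping tolerates such timing differences while still penalizing differences in shape.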

3.1.5. Brain-Computer Interface Methods

In the scope of AAC, Brain-Computer Interface (BCI) solutions are being widely researched to allow AAC users to control external devices by modulating their brain signals [63,64,65]. Brain interfaces are either invasive or non-invasive. Invasive interfaces involve the use of implanted electrodes and the interconnection of the brain with the peripheral nerves [64]. Non-invasive BCIs use external devices to monitor a user's brain activity through EEG [60,64], magnetoencephalography (MEG) [63], functional magnetic resonance imaging (fMRI) [63,64], or near-infrared spectroscopy (NIRS) [63,64]. The components and flow diagram of a typical BCI system are shown in the accompanying figure.

The components and flow diagram of a Brain-Computer Interface (BCI) system, adapted from [66,67].

EEG is a popular BCI recording method, given its non-invasive nature and relatively low cost [68,69]. In electrical BCI systems, the brain produces a set of electrical signals when triggered by a stimulus, known as the evoked potential [70]. EEG signals are acquired using 2 to 64 sensors placed on the user's scalp to record the brain activity [71]. Amplifiers and filters are typically used, with the output fed back to the user so that they can modulate their brain activity accordingly [64]. Regression and classification algorithms can be used to translate brain activity into a computer command [72]. An adaptive auto-regressive (AR) parameter estimation model used with EEG BCI describes a time series signal x(t) as

$$x(t) = \sum_{i=1}^{p} a_i\, x(t-i) + \varepsilon(t) \tag{8}$$

where $a_i$ and p are the AR coefficients and the order of the model, respectively, and $\varepsilon(t)$ is white noise [73,74]. A review study [72] demonstrates that classification algorithms are an increasingly popular approach with BCI interfaces, as they are commonly used to identify the acquired brain patterns. Classification is the process of using a mapping f to predict the correct label y corresponding to a feature vector x. A training set T is used with the classification model to find the best mapping, denoted by f* [72]. The classification accuracy of a model depends on a variety of factors. Using the mean square error (MSE), the study in [72] identifies three sources of classification error, given that

$$\text{MSE} = E\left[\left(y - f(x)\right)^2\right] \tag{9}$$

can be decomposed into

$$\text{MSE} = \text{Var}\left(f(x)\right) + \text{Bias}\left(f(x)\right)^2 + \text{Noise}^2 \tag{10}$$

where the variance (Var) represents the model's sensitivity to T, the Bias represents the accuracy of the mapping f, and the Noise is the irreducible error present in the system. Common ML algorithms used with BCI include linear classifiers (such as linear support vector machines), neural networks, nonlinear Bayesian classifiers, nearest neighbors, and combinations of classifiers [71,72]. Signal processing techniques pertinent to BCI methods include both time-frequency analysis, such as AR models, wavelets, and Kalman filtering, and spatiotemporal analysis, such as the Laplacian filter [75]. Hybrid BCI is a different approach to brain signal processing, combining a variety of brain and body signals in sequential and parallel processing operations with the aim of improving the accuracy of BCI systems [76].
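To make the notion of a mapping f learned from a training set T more concrete, the following Python sketch implements a k-nearest-neighbour classifier, one of the algorithm families listed above. The two-dimensional feature vectors, the class labels, and the function name are hypothetical; practical BCI pipelines extract features from many channels and validate the classifier on held-out data.

```python
import math
from collections import Counter

def knn_predict(train, x, k=3):
    """Predict the label of feature vector `x` from its k nearest training examples.

    train: list of (feature_vector, label) pairs, i.e. the training set T.
    """
    neighbours = sorted(train, key=lambda pair: math.dist(pair[0], x))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

# Hypothetical features, e.g. normalized band powers from two EEG channels.
T = [
    ((0.9, 0.2), "left"), ((1.0, 0.1), "left"), ((0.8, 0.3), "left"),
    ((0.2, 0.9), "right"), ((0.1, 1.0), "right"), ((0.3, 0.8), "right"),
]

prediction = knn_predict(T, (0.85, 0.25))
print(prediction)
```

The choice of k illustrates the trade-off between variance and bias: a small k makes the prediction more sensitive to the particular training set, whereas a large k smooths the decision at the cost of a less faithful mapping.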

BCIs are under continuous research to aid the communication of individuals affected by motor strokes [63], ALS, LIS, and spinal cord injuries [77]. BCI systems rest on three basic pillars: user training, the associated ML, and the application in use [78,79]. Research in the area of BCIs is currently evolving [63], with promising results in recent state-of-the-art projects. A study by Stanford University [80] confirmed that quadriplegic users can use a BCI to control an unmodified smart device. BCIs have also been used to surf the Internet [81], with an EEG-based BCI application tested with users with LIS and ALS [82]. It is also reported that BCIs could help users control spelling applications and play games [80].

3.2. Machine and Deep Learning

Typical signal processing of the acquired AAC signals encompasses three primary operations: encoding, prediction, and retrieval [10]. Encoding involves the conversion of the acquired signal into a pre-defined format accepted by the system for the production of a specified output, whereas prediction is concerned with building the algorithms used to select the desired output [10]. Prediction encompasses several operational contexts, including word [83], message, and icon prediction [10]. In general, an ideal AAC system should integrate self-learning capabilities to respond to its user's individual needs [2,8]. Demographic data show that current AAC users belong to numerous cultural and linguistic backgrounds [7]. In turn, the design of systems tailored to specific users' requirements is vital for enhanced adaptability. High-tech AAC is hence becoming a highly interdisciplinary area of research, combining rehabilitation engineering with clinical and psychological studies, signal processing, and ML [84].

ML has evolved considerably over the last decade, with a number of applications aimed at providing intelligent AAC solutions that address users' needs. The automation, prediction, and classification capabilities offered by ML solutions could be of great benefit to users. Technologies such as natural language processing (NLP) are highly dependent on artificial intelligence (AI). NLP is centered on the analysis, augmentation, and generation of language, including the computation of the probabilities of incoming words and phrases, and complete sentence transformations [85]. NLP has various applications in AAC, using ML and statistical language models to process and generate outputs by optimizing word prediction models, topic models [86], speech recognition algorithms, and the processing of the context of usage [85]. BCI is also highly dependent on ML, as users learn to encode their intended messages through dedicated brain signal features, which the BCI captures and translates into the intended meaning or the desired control [78,84,87,88]. Recent studies also show that deep learning (DL) algorithms, such as convolutional and recurrent neural networks, could potentially outperform conventional classification methods [70]. As demonstrated in [89], ML is also used in conjunction with ECG spelling based BCI applications to minimize training times, although conversational rates are still generally reported to be slow [90]. On a broader scale, research in [91] demonstrates that neural networks could potentially be used to learn, predict, and adapt to events within a user's environment to aid people with disabilities.
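The computation of the probabilities of incoming words, which underlies word prediction in AAC, can be illustrated with a minimal bigram model in Python. This is a sketch of a basic statistical language model rather than the method of any cited system; the training sentences and function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count how often each word follows another in the training sentences."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for previous, current in zip(words, words[1:]):
            counts[previous][current] += 1
    return counts

def predict_next(counts, previous_word, n=3):
    """Return up to n (word, probability) pairs ranked by P(word | previous_word)."""
    followers = counts.get(previous_word.lower())
    if not followers:
        return []
    total = sum(followers.values())
    return [(word, count / total) for word, count in followers.most_common(n)]

# Hypothetical message history of a single user.
corpus = [
    "i want water",
    "i want to rest",
    "i want water please",
    "i need help",
]

model = train_bigrams(corpus)
suggestions = predict_next(model, "want")
print(suggestions)
```

Because the counts are built from the user's own message history, such a model adapts to individual vocabulary over time, which relates to the self-learning capability discussed in this section.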